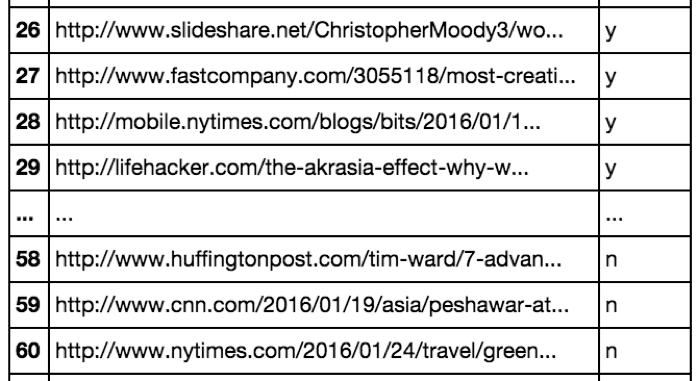

The goal of this project is to learn and apply Named Entity Recognition (NER) to extract important entities (publicly traded companies in our example) and then link each entity with some information using a knowledge base (the Nifty500 companies list). We'll get the textual data from RSS feeds on the internet and extract the names of buzzing stocks. We'll then pull their market price data to test the authenticity of the news before taking any position in those stocks.

Note: NER may not be a state-of-the-art problem, but it has many applications in the industry.
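As a rough illustration of the linking step, the sketch below matches company names in a headline against a tiny in-memory knowledge base. It is a plain dictionary lookup rather than a trained NER model, and the `KNOWLEDGE_BASE` entries, the `link_entities` helper, and the sample headline are all hypothetical stand-ins for the Nifty500 list and real feed text.

```python
import re

# Hypothetical stand-in for the Nifty500 knowledge base:
# company name -> ticker symbol and sector (illustrative values only).
KNOWLEDGE_BASE = {
    "Example Motors": {"symbol": "EXMOT", "sector": "Automobile"},
    "Sample Bank": {"symbol": "SMPBK", "sector": "Financial Services"},
}


def link_entities(text, knowledge_base):
    """Return knowledge-base records for every company name found in text."""
    linked = []
    for name, record in knowledge_base.items():
        # Whole-word, case-insensitive match on the company name.
        pattern = r"\b" + re.escape(name) + r"\b"
        if re.search(pattern, text, flags=re.IGNORECASE):
            linked.append({"name": name, **record})
    return linked


headline = "Example Motors shares jump after quarterly results"
matches = link_entities(headline, KNOWLEDGE_BASE)
print(matches)
```

A statistical NER model would replace the regular-expression match, but the linking idea stays the same: each recognized name becomes a key into the knowledge base.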

Information retrieval has always been a major task and challenge in NLP, and we can use NER (or NEL, Named Entity Linking) in several domains like finance, drug research, e-commerce, and more for information retrieval purposes. In this tutorial post, I'll show you how you can leverage NEL to develop a custom stock market news feed that lists the buzzing stocks on the internet.

What you'll need to get started: there are no real prerequisites as such. It would be helpful if you had some familiarity with Python and the basic tasks of NLP like tokenization, POS tagging, dependency parsing, and so on. I'll cover the important bits in more detail, so even if you're a complete beginner you'll be able to wrap your head around what's going on.

Follow along and you'll have a minimal stock news feed that you can start researching by the end of this tutorial. So, let's get on with it!

One of the very interesting and widely used applications of Natural Language Processing is Named Entity Recognition (NER). Getting insights from raw and unstructured data is of vital importance. Uploading a document and getting the important bits of information from it is called information retrieval.

The cutoff time is computed as `since_time = datetime.now(pytz.utc) + timedelta(days=-5)`, which yields a timezone-aware UTC timestamp. `fetch_content` will fetch all contents if no parameters are passed. If `after` is passed, it will fetch contents after this date. If `since_id` is passed, it will fetch contents after this id.

The reader returns `RedditContent` objects; their `extracted_text` and `image_alt_text` fields are extracted from the Reddit content via BeautifulSoup.

The output is given with UTF-8 charsets. If you are scraping non-English subreddits, set the environment to use UTF - export LANG=en_US.
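The date cutoff described above can be illustrated with the standard library alone. The sketch below is not the reddit-rss-reader implementation: it assumes an Atom-style feed, and the `SAMPLE_FEED` string, the `entries_after` helper, and the fixed `now` value are hypothetical, chosen so the example is deterministic.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timedelta, timezone

ATOM_NS = {"atom": "http://www.w3.org/2005/Atom"}

# Hypothetical sample feed; a real feed would be downloaded instead.
SAMPLE_FEED = """<feed xmlns="http://www.w3.org/2005/Atom">
  <entry>
    <id>t1_new</id>
    <title>Recent comment</title>
    <updated>2024-01-10T12:00:00+00:00</updated>
  </entry>
  <entry>
    <id>t1_old</id>
    <title>Old comment</title>
    <updated>2024-01-01T12:00:00+00:00</updated>
  </entry>
</feed>"""


def entries_after(feed_xml, after):
    """Return the ids of feed entries updated strictly after the cutoff."""
    root = ET.fromstring(feed_xml)
    kept = []
    for entry in root.findall("atom:entry", ATOM_NS):
        updated_text = entry.findtext("atom:updated", namespaces=ATOM_NS)
        updated = datetime.fromisoformat(updated_text)
        if updated > after:
            kept.append(entry.findtext("atom:id", namespaces=ATOM_NS))
    return kept


# Fixed "now" for a reproducible example; in practice this would be
# datetime.now(timezone.utc).
now = datetime(2024, 1, 12, tzinfo=timezone.utc)
since_time = now - timedelta(days=5)  # keep only the past 5 days

recent_ids = entries_after(SAMPLE_FEED, since_time)
print(recent_ids)
```

Comparing timezone-aware timestamps on both sides avoids the off-by-offset errors that can appear when a naive local time is mixed with UTC.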

It can be used to fetch content from the front page, a subreddit, all comments of a subreddit, all comments of a certain post, comments of a certain Reddit user, search pages, and many more. For more details about what type of RSS feed is provided by Reddit, refer to these links: link1 and link2.

*Note: These feeds are rate limited and hence can only be used for testing purposes. For serious scraping, register your bot at apps to get client details and use it with PRAW.*

Install via PyPI: `pip install reddit-rss-reader`

Install from the master branch (if you want to try the latest features): `git clone`

RedditRSSReader requires a feed URL, hence refer link to generate one, for example to fetch all comments on the subreddit r/wallstreetbets.

Now you can run the following example:

`import pprint`
`from reddit_rss_reader.reader import RedditRSSReader`
`# To consider comments entered in past 5 days only`


This is a wrapper around publicly/privately available Reddit RSS feeds.
